Keys to productive AI

The next big thing?

Prediction models, statistical analysis, machine learning, and AI in general have roots that go back centuries.

The first documented use of predictive analytics comes from Lloyd's of London, in 1698. It sought a way to underwrite insurance for overseas travellers.

The company would accept the risk of sea voyages in return for a premium.

Premium quotes were based on historical data, which the underwriters had plenty of. Lloyd's used records of past voyages to evaluate the risk of new ones and to predict patterns of liability. Lloyd's continues to use predictive models in all facets of its insurance underwriting, and the practice has become standard across the insurance industry.

Computers didn't even exist back then, so the process was done with pen and paper. A lot of paper, and many analysts combing through the data and continually improving their predictions. It isn't AI per se, but the method is at the core of AI: modelling predictions to drive decisions.

The big thing

To some, putting AI and ML in the same sentence as DevOps may still sound like stacking buzzwords. But it is a major evolution, if not a revolution, for DevOps engineering. Innovation in development and operations processes, tools, and infrastructure raises productivity so dramatically that relatively small startups like Midjourney can ship a constantly improving generative AI service that has produced artworks in the billions so far. It would be wrong to assume these principles have never been applied elsewhere. In fact, they are very much in use, but mostly in specialized environments inside big tech companies.

The era of static tooling for deployment, provisioning, packaging, monitoring, and log management is coming to an end. Adopting containers, microservices, the cloud, and API-driven approaches to deploy applications at scale with high reliability requires a different take.

So it's important to use modern tooling and pipelines tailored to this use case, and to make appropriate use of cloud providers instead of reinventing the wheel every time. With the rise of ML and AI, we will see more DevOps tooling vendors build intelligence into their offerings to further simplify engineers' work.

When Microsoft CEO Satya Nadella talked about using machine learning for DevOps, he stressed democratizing these practices across industries to maximize technology adoption and, in turn, productivity.

Before we jump into productivity and DevOps, let's outline the context and what DevOps is all about.

What is DevOps?

DevOps is a set of practices and skill sets that combines software development (Dev) and IT operations (Ops) to shorten the software development life cycle (SDLC) while delivering features, fixes, and updates frequently, in close alignment with business objectives.

Different disciplines collaborate, ensuring enhanced quality, security and performance.

DevOps and productivity

The SDLC has seen dramatic productivity improvements in past decades, largely thanks to the widespread adoption of Agile software delivery methodologies. When Agile was first adopted, especially by enterprises, it ran into some friction in practice.

The key difference between Agile methodologies and traditional waterfall approaches is that Agile favors frequent, incremental changes over large, occasional ones, which in turn puts tremendous pressure on the delivery pipeline.

Cloud offerings owe part of their success to the increased demand for on-demand infrastructure and software services. The whole premise of the cloud is the ability to scale up quickly and replicate environments many times over. Without DevOps-driven demand, a large chunk of the cloud offering would simply vanish.
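Scaling up quickly is usually automated. As one concrete illustration, Kubernetes' Horizontal Pod Autoscaler sizes a workload in proportion to the ratio between an observed metric and its target. The minimal sketch below reproduces only that core ratio, using hypothetical min/max bounds. It omits refinements the real autoscaler applies, such as its tolerance band and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Replica count proportional to observed load versus target load per replica."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale by how far the observed metric sits from the target, rounding up.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the (hypothetical) configured bounds so a spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 3 replicas averaging 90% CPU against a 60% target: ceil(4.5) replicas.
    print(desired_replicas(3, 90.0, 60.0))
```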

What is Machine Learning?

Wikipedia gives a pretty good definition: machine learning is the scientific study of algorithms and statistical models that computer systems use to perform a specific task effectively without explicit instructions, relying on patterns and inference instead. It is seen as a building block of artificial intelligence because it enables machines to learn tasks without being explicitly programmed to do them.
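To make "relying on patterns and inference instead of explicit instructions" concrete, here is a minimal sketch. Nobody hand-codes the rule relating the two quantities. Instead, the program estimates a straight-line relationship from example pairs using ordinary least squares, then uses that learned relationship to predict. The metric names and data points are made up for illustration.

```python
from __future__ import annotations


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Learn slope and intercept from examples by ordinary least squares."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y divided by the variance of x gives the best-fit slope.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    if var == 0:
        raise ValueError("x values must not all be equal")
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model: tuple[float, float], x: float) -> float:
    """Apply the learned line to a new input."""
    slope, intercept = model
    return slope * x + intercept


if __name__ == "__main__":
    # Hypothetical observations: request volume (thousands) vs. p95 latency (ms).
    volume = [1.0, 2.0, 3.0, 4.0, 5.0]
    latency = [12.0, 14.0, 16.0, 18.0, 20.0]
    model = fit_line(volume, latency)
    print(model, predict(model, 6.0))
```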

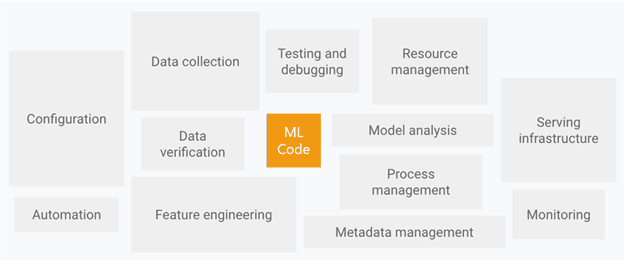

The scientific study is one thing. But how do we engineer the pipelines and streamline the entire process?

Machine learning and productivity

The rise in popularity of ML algorithms and a growing ecosystem of easy-to-use libraries have made complex ML use cases relatively simple to implement. These libraries give tremendous power but still demand data that is accessible, clean, and ideally labelled. In fact, much of an ML engineer's job is getting the data ready for the algorithm, which includes data ingestion, wrangling, and cleaning.
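The ingestion and cleaning work described above often looks like the following minimal sketch. It reads raw CSV text, drops rows with missing or non-numeric values, normalizes text fields, and removes duplicates. The column names and sample rows are hypothetical, and real pipelines would typically log or quarantine dropped rows rather than silently skip them.

```python
from __future__ import annotations

import csv
import io

# Hypothetical raw export with a missing value, a duplicate, bad types and messy casing.
RAW = """host,cpu_pct,region
web-1,42.5,eu-west
web-2,,eu-west
WEB-1,42.5,eu-west
web-3,abc,us-east
web-4, 77.0 ,US-EAST
"""


def clean(raw_csv: str) -> list[dict]:
    """Ingest CSV text, drop unusable rows, normalize fields, remove duplicates."""
    rows = []
    seen = set()
    for record in csv.DictReader(io.StringIO(raw_csv)):
        try:
            host = record["host"].strip().lower()
            region = record["region"].strip().lower()
            cpu = float(record["cpu_pct"])  # float() tolerates surrounding whitespace
        except (AttributeError, TypeError, ValueError):
            continue  # short row, missing field, or non-numeric value: drop it
        key = (host, region, cpu)
        if key in seen:
            continue  # duplicate once casing and whitespace are normalized
        seen.add(key)
        rows.append({"host": host, "cpu_pct": cpu, "region": region})
    return rows


if __name__ == "__main__":
    for row in clean(RAW):
        print(row)
```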

The challenges modern data pipelines face are mostly solved by carefully crafting those pipelines with DevOps methodologies and practices. The term that has emerged for this sub-field is DataOps.
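One way DataOps borrows from DevOps is by treating data checks like tests in CI. A pipeline stage validates each batch and fails the run before bad data reaches training. Below is a minimal sketch, assuming batches arrive as lists of dicts. The function name, the 5% null-rate threshold, and the exception type are all hypothetical, and dedicated data-validation tools offer far richer checks.

```python
from __future__ import annotations


class DataQualityError(Exception):
    """Raised to fail the pipeline run when a batch breaks an expectation."""


def check_batch(rows: list[dict], required: tuple[str, ...], max_null_rate: float = 0.05) -> None:
    """Validate a batch before it moves downstream; raise instead of passing bad data on."""
    if not rows:
        raise DataQualityError("empty batch")
    for column in required:
        # A key that is absent counts the same as an explicit None.
        missing = sum(1 for row in rows if row.get(column) is None)
        rate = missing / len(rows)
        if rate > max_null_rate:
            raise DataQualityError(
                f"column {column!r}: null rate {rate:.0%} exceeds {max_null_rate:.0%}"
            )


if __name__ == "__main__":
    batch = [{"id": 1, "latency_ms": 120.0}, {"id": 2, "latency_ms": None}]
    try:
        check_batch(batch, required=("id", "latency_ms"))
    except DataQualityError as err:
        print(f"pipeline stopped: {err}")
```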

DataOps and Machine Learning for DevOps

Whenever DevOps and ML come up together, people often conflate the topic with DataOps. The two are sides of the same coin: DevOps practices help manage the data pipeline better, while machine learning principles and algorithms help optimize the DevOps pipeline. In other words, DataOps leverages DevOps practices to improve the efficiency and accuracy of the data pipeline, whereas machine-learning-driven DevOps, or AI DevOps, leverages machine learning techniques to improve the DevOps pipeline.

AI/ML solutions for DevOps

Many aspects of modern DevOps practice can improve dramatically even with the simplest machine learning algorithms.

- Time-based predictions can now be made much more accurately using neural networks.

- Natural Language Processing (NLP) powers chatbots and voice assistants like Alexa, Siri, and Cortana, whose virtualized services become more and more adaptive to user interactions.

- Image processing with neural networks depends entirely on tagging images and on their recognition and validation by automated systems. The strength of such models depends on the variety and volume of these tagged images.
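The first bullet mentions neural networks. A far simpler baseline for the same kind of time-based prediction, such as forecasting next hour's request volume, is simple exponential smoothing, sketched below. It is a swapped-in classical technique, not a neural network, and the smoothing factor and series are arbitrary.

```python
from __future__ import annotations


def exponential_smoothing(series: list[float], alpha: float) -> float:
    """Return a one-step-ahead forecast using simple exponential smoothing."""
    if not series:
        raise ValueError("series must not be empty")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]
    for value in series[1:]:
        # Blend the newest observation with the previous smoothed level;
        # a larger alpha reacts faster, a smaller one smooths more.
        level = alpha * value + (1 - alpha) * level
    return level


if __name__ == "__main__":
    hourly_requests = [100.0, 120.0, 110.0]  # hypothetical series
    print(exponential_smoothing(hourly_requests, alpha=0.5))
```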

AI/ML DevOps can streamline this process and actually enrich the algorithm without imparting bias by itself. Following are some of the areas where AI DevOps can dramatically improve development and operational productivity:

Source code, Models and Testing

We all know that bugs creep in whenever code changes. Developers let some slip through while rushing to ship the features everyone wants as soon as possible. The key to maintaining both productivity and software quality is catching bugs early.

Standard workflows with automated QE checks remain highly useful:

- Unit and mutation tests

- Integration validation routines

- End-to-end system tests through staged environments
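Mutation testing checks the tests themselves. It injects small faults ("mutants") into the code and counts how many of them the test suite catches. Real tools generate mutants automatically. The minimal sketch below hand-writes three mutants for a hypothetical `apply_discount` function, and the mutant that survives points at a missing test case.

```python
from __future__ import annotations

from typing import Callable

Discount = Callable[[int, int], int]


def apply_discount(price_cents: int, percent: int) -> int:
    """Code under test (hypothetical): apply a percentage discount, never below zero."""
    percent = min(percent, 100)
    return price_cents * (100 - percent) // 100


# Hand-written mutants: small deliberate faults a mutation tool would generate.
MUTANTS: dict[str, Discount] = {
    "minus_to_plus": lambda p, pct: p * (100 + min(pct, 100)) // 100,
    "ignore_percent": lambda p, pct: p,
    "drop_clamp": lambda p, pct: p * (100 - pct) // 100,
}


def unit_tests(fn: Discount) -> bool:
    """The existing unit tests; True when every check passes."""
    return fn(10_000, 10) == 9_000 and fn(5_000, 0) == 5_000


def run_mutation_testing() -> tuple[float, list[str]]:
    """Return the mutation score and the names of mutants the tests failed to catch."""
    if not unit_tests(apply_discount):
        raise RuntimeError("tests must pass on the original code first")
    survivors = [name for name, mutant in MUTANTS.items() if unit_tests(mutant)]
    score = (len(MUTANTS) - len(survivors)) / len(MUTANTS)
    return score, survivors


if __name__ == "__main__":
    score, survivors = run_mutation_testing()
    print(f"mutation score {score:.0%}, survivors: {survivors}")
```

Here `drop_clamp` survives because no test uses a discount above 100%. Adding a check such as `fn(1_000, 150) == 0` would catch it.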

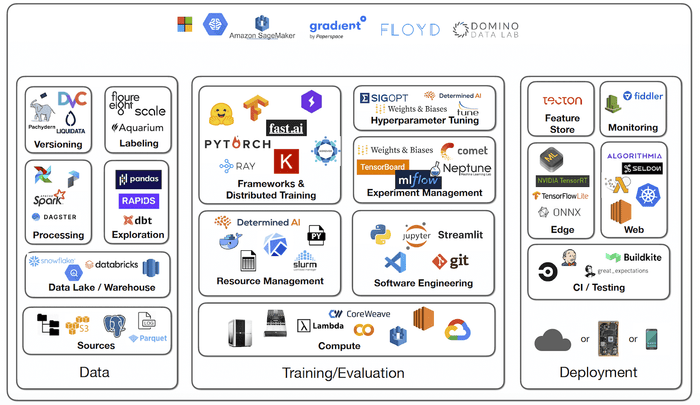

Further tooling, tailored more specifically to ML workflows, then steps in, such as:

- Data streaming with Apache Flink

- Tracking training runs, packaging, and serving models with MLflow

- Yatai for deployment and serving

- BentoML as a unified model-serving framework

A burgeoning ecosystem of tools and services keeps expanding to increase productivity further.

Monitoring and alerting

Monitoring platforms now implement multiple forms of anomaly detection to catch and alert on unusual behavior. More is coming in this field as AI and machine learning are increasingly tailored to each environment, giving end users personalized models.
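A common starting point for anomaly detection is a rolling z-score. Each new data point is compared with the mean and standard deviation of a trailing window, and an alert fires when the point strays too far. Below is a minimal sketch in which the window size and 3-sigma threshold are arbitrary choices. Production platforms typically also account for seasonality and trends, which this ignores.

```python
from __future__ import annotations

from statistics import mean, pstdev


def detect_anomalies(values: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Indices whose value deviates from the trailing window by more than `threshold` std devs."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = pstdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is unusual.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    latency_ms = [100.0, 102.0, 98.0, 101.0, 99.0, 100.0, 250.0, 101.0]  # hypothetical
    print(detect_anomalies(latency_ms))
```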

What about FinOps?

Resource management, cost and optimization.

FinOps is the term for the frameworks, tools, and talent that handle this part. Cloud resource optimization starts with cleaning up resources after use, then using lazy instantiation and chasing spot-market opportunities to keep billed usage to a minimum. These mechanics work pretty well, but a data-driven approach promises even greater flexibility and cost optimization.
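A simple data-driven FinOps step is to let utilization data, rather than guesswork, decide what to clean up. Below is a minimal sketch, assuming per-instance CPU samples are already being collected. The `Instance` type, the 5% idle threshold, the 730-hour month, and the prices are all hypothetical. Real cost tooling also weighs memory, network, and commitments such as reserved or spot capacity.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Instance:
    name: str
    hourly_cost: float        # USD per hour (hypothetical prices)
    cpu_samples: list[float]  # recent CPU utilization samples, in percent


def idle_instances(fleet: list[Instance], idle_cpu_pct: float = 5.0) -> list[Instance]:
    """Instances whose CPU never reached the idle threshold during the sample window."""
    return [inst for inst in fleet if inst.cpu_samples and max(inst.cpu_samples) < idle_cpu_pct]


def monthly_savings(instances: list[Instance], hours_per_month: float = 730.0) -> float:
    """Estimated spend avoided by shutting the given instances down."""
    return sum(inst.hourly_cost for inst in instances) * hours_per_month


if __name__ == "__main__":
    fleet = [
        Instance("batch-1", 0.10, [2.0, 3.5, 1.0]),
        Instance("api-1", 0.20, [40.0, 65.0, 55.0]),
        Instance("dev-sandbox", 0.05, [0.5, 0.0, 1.2]),
    ]
    idle = idle_instances(fleet)
    print([inst.name for inst in idle], round(monthly_savings(idle), 2))
```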

Predictive Model Development

Finally, sustainability means looking at how AI/ML and operational factors contribute to mid- to long-term success as well.

It is one thing to develop a model. It is a completely different challenge to develop a platform that handles changes in the data sets over time and adapts iteratively to business changes. Consumer tastes change, and so do environments, constraints, and regulatory requirements.

Many models become stale over time, and revamping and revalidating existing models takes significant time and resources. DevOps is also about enabling the cycle of change. Automating model tuning and validation makes it easy to develop and deploy machine learning and AI for multiple use cases that change over time. DevOps pipelines can handle the data flow in a way that detects and flags anomalies in the data while providing automated tuning. Fields such as online learning and reinforcement learning focus on models that keep adapting as conditions change.

Meanwhile, such a setup still allows engineers to step in and customize the pipelines to the immediate objectives of the business.
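Detecting that a model has gone stale often starts with watching its inputs. One widely used heuristic is the Population Stability Index (PSI), which compares a feature's distribution at training time with its distribution in live traffic. A commonly quoted rule of thumb treats values above about 0.25 as a significant shift. The minimal sketch below uses equal-width bins and a hypothetical `needs_retraining` helper to show one way a pipeline could flag a model for retuning. The bin count, the epsilon, and the threshold are all assumptions.

```python
from __future__ import annotations

import math


def psi(expected: list[float], actual: list[float], bins: int = 4, eps: float = 1e-4) -> float:
    """Population Stability Index of `actual` against `expected`, using equal-width bins."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the training feature is constant

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the training range are clamped into the first or last bin.
            idx = min(bins - 1, max(0, int((v - lo) / width)))
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


def needs_retraining(train_feature: list[float], live_feature: list[float],
                     threshold: float = 0.25) -> bool:
    """Hypothetical pipeline hook: flag the model when the input drift exceeds the threshold."""
    return psi(train_feature, live_feature) > threshold


if __name__ == "__main__":
    train = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
    live = [7.0, 8.0, 8.0, 9.0, 9.0, 10.0, 10.0, 11.0]
    print(round(psi(train, live), 3), needs_retraining(train, live))
```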

Conclusion

The technologies and methodologies for succeeding with AI/ML are no strangers to those who already practice them day to day. The key point is that the next big thing is already here: it is a matter of adopting it. The ecosystem will keep growing as people's needs for predictions and decision-making assistance expand. What improves the productivity of AI solutions today is, in large part, embracing and leveraging next-generation DevOps principles, strategies, tooling, and tactics, tailored to concrete use cases.